Algorithmic Bias vs. Data Bias: A Deep Dive

When people talk about bias in artificial intelligence (AI) and machine learning (ML), they often throw around terms like algorithmic bias and data bias as if they mean the same thing. But they don’t.

They are related, yes — but they emerge from different sources, behave differently, and require different solutions.

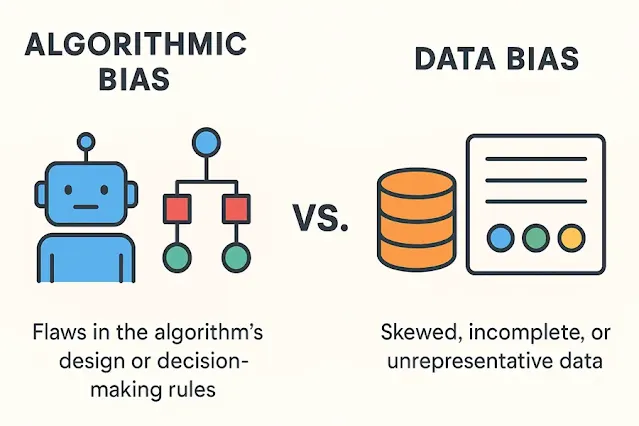

Algorithmic bias comes from flaws in an AI’s design or decision-making rules, while data bias arises from skewed, incomplete, or unrepresentative training data.

Learn how they differ, how they interact, and ways to fix each for fairer AI results.

Key point: Algorithmic bias comes from flawed design; data bias comes from skewed data.

Introduction — Why Understanding the Difference Matters

Imagine you build a hiring AI that screens job applications. After a few months, you find it is rejecting more women than men, even though both have equal qualifications. Now, you ask:

“Is the problem with my algorithm, or is it with the data I fed it?”

That’s where the difference between algorithmic bias and data bias matters.

If you don’t know which type of bias is at play, you might “fix” the wrong thing — and still end up with unfair results.

- Algorithmic bias is about the way the rules of the system or its design create unfairness.

- Data bias is about the information you train it on being skewed, incomplete, or unrepresentative.

The two are often tangled together — but they are not the same disease, and they need different treatments.

What Is Algorithmic Bias?

Algorithmic bias happens when the logic, design, or mathematical operations of the algorithm itself lead to outcomes that are systematically unfair.

This bias can creep in even if the data is perfect.

Key point: The rules of decision-making themselves are flawed in a way that disadvantages certain groups.

How It Happens

Algorithmic bias can arise in several ways:

- Flawed model design – If the algorithm is built in a way that inherently favors certain patterns or variables over others.

- Choice of features – Including or excluding certain variables can unintentionally skew results.

- Weighting errors – Giving more importance to some variables than is justified can favor one outcome.

- Objective function issues – If the algorithm’s “goal” (like maximizing profit) ignores fairness, it can create biased results.

Example

Suppose an algorithm decides who gets a loan. The designers choose an objective function that maximizes the bank’s profit without considering fairness. The model may learn to reject most applicants from certain postal codes, not because each person there is a bad borrower, but because a stricter cutoff for those areas is slightly more profitable on average. Here, even if we gave it perfect data, the decision-making rule would still discriminate, because nothing in the objective penalizes unequal outcomes.
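As a rough illustration of the objective-function problem, here is a minimal Python sketch. Everything in it is hypothetical: the postal codes `A` and `B`, the repayment probabilities, and the gain and loss figures are made up for the example, and a real lender's objective would be far more involved. It picks the approval threshold that maximizes expected profit and then reports the approval rate per postal code.

```python
# Hypothetical figures: profit if a loan is repaid, loss if it defaults.
GAIN_IF_REPAID = 1.0
LOSS_IF_DEFAULT = 2.0

# (postal_code, probability of repayment); assume these values are accurate.
applicants = [
    ("A", 0.90), ("A", 0.80), ("A", 0.75), ("A", 0.70),
    ("B", 0.85), ("B", 0.70), ("B", 0.65), ("B", 0.60),
]


def expected_profit(p_repay):
    """Expected profit of approving one applicant."""
    return p_repay * GAIN_IF_REPAID - (1 - p_repay) * LOSS_IF_DEFAULT


def total_profit(threshold):
    """Profit-only objective: fairness does not appear anywhere in it."""
    return sum(expected_profit(p) for _, p in applicants if p >= threshold)


def approval_rates(threshold):
    """Share of applicants approved in each postal code."""
    rates = {}
    for code in sorted({c for c, _ in applicants}):
        probs = [p for c, p in applicants if c == code]
        rates[code] = sum(p >= threshold for p in probs) / len(probs)
    return rates


# Try every observed probability as a cutoff and keep the most profitable.
candidate_thresholds = sorted({p for _, p in applicants})
best_threshold = max(candidate_thresholds, key=total_profit)

print("profit-maximizing threshold:", best_threshold)
print("approval rate by postal code:", approval_rates(best_threshold))
```

With these made-up numbers, the profit-only rule approves every applicant in postal code A but only half of those in B, even though the data is assumed to be accurate. The gap comes from what the objective rewards, not from errors in the data.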

Research Insight

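In a famous 2016 ProPublica investigation, the COMPAS recidivism algorithm was found to assign higher re-offense risk to Black defendants than to white defendants, even when they had similar criminal histories. In particular, Black defendants who did not go on to re-offend were almost twice as likely to have been labeled higher risk. The tool’s developer disputed the analysis, and much of the disagreement came down to algorithmic design choices, like how the risk score was calculated and which error trade-offs were prioritized.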

What Is Data Bias?

Data bias happens when the information used to train, validate, or test an algorithm is not an accurate reflection of the real world or of the population it’s supposed to serve.

Key point: Even the most perfectly designed algorithm will give biased results if it is trained on biased data.

How It Happens

Data bias can appear in many forms:

- Sampling bias – The training data doesn’t represent all groups equally.

- Historical bias – The data reflects past prejudices or inequalities.

- Measurement bias – Errors or inconsistencies in how data is recorded.

- Label bias – The way outcomes are labeled in the dataset can reflect human prejudice.

Example

Let’s go back to the hiring AI scenario. If your training data is based on 20 years of company hiring history, and historically the company mostly hired men, the AI might learn that “being male” is associated with “being hired.” The algorithm may then reject female candidates even if it has no direct “gender” field, because other résumé details can act as proxies for gender.

The bias here is baked into the data, not the algorithm’s logic.
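To see how a model with no gender field can still pick up gender, consider this small Python sketch. The hiring history, the field name `mentions_womens_club`, and the frequency-based "model" are all hypothetical stand-ins, chosen only to keep the example short.

```python
from collections import defaultdict

# Hypothetical hiring history. Gender is recorded here only so we can audit
# the result afterwards; the "model" below never reads it.
history = (
    [{"gender": "M", "mentions_womens_club": False, "hired": 1}] * 6
    + [{"gender": "M", "mentions_womens_club": False, "hired": 0}] * 2
    + [{"gender": "F", "mentions_womens_club": True, "hired": 1}] * 1
    + [{"gender": "F", "mentions_womens_club": True, "hired": 0}] * 2
    + [{"gender": "F", "mentions_womens_club": False, "hired": 0}] * 1
)


def learn_hire_rates(rows, feature):
    """'Train' by memorizing the historical hire rate per feature value."""
    counts = defaultdict(lambda: [0, 0])  # value -> [hired, total]
    for row in rows:
        counts[row[feature]][0] += row["hired"]
        counts[row[feature]][1] += 1
    return {value: hired / total for value, (hired, total) in counts.items()}


# The model's score for a candidate depends only on the proxy feature.
scores = learn_hire_rates(history, "mentions_womens_club")


def average_score(gender):
    """Audit step: average model score received by each gender."""
    rows = [r for r in history if r["gender"] == gender]
    return sum(scores[r["mentions_womens_club"]] for r in rows) / len(rows)


print("score by feature value:", scores)
print("average score, men:", round(average_score("M"), 3))
print("average score, women:", round(average_score("F"), 3))
```

The scoring logic is neutral on its face, yet women end up with a lower average score because the proxy feature carries the historical pattern. Fixing the model's code would not help here; the history itself is the problem.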

Research Insight

A 2018 study from the MIT Media Lab (the Gender Shades project) found that commercial facial analysis systems had much higher error rates for darker-skinned women than for lighter-skinned men. The likely cause? The study showed that widely used face benchmark datasets were overwhelmingly made up of lighter-skinned faces. This is a classic case of data bias.

Core Differences Between Algorithmic Bias and Data Bias

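Now that we understand each type, let’s compare them head-to-head.

- Source – Algorithmic bias comes from the model’s design: its features, weights, and objective function. Data bias comes from the dataset: how it was sampled, measured, and labeled.

- Persistence – Algorithmic bias can remain even with representative data. Data bias can remain even with a well-designed algorithm.

- Fix – Algorithmic bias is fixed by changing the model’s rules. Data bias is fixed by changing the data.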

How Algorithmic Bias Interacts with Data Bias

Here’s the tricky part: algorithmic bias and data bias often reinforce each other. If the training data is biased (say, it favors one group), the algorithm learns that pattern and makes unfair decisions based on it. Even an algorithm that seems neutral can reflect and amplify the bias hidden in the data.

If your data is biased and your algorithm design is also flawed, the bias gets amplified further.

Example:

- Your training data contains mostly male candidates for tech jobs (data bias).

- Your algorithm also happens to overemphasize certain résumé keywords historically used more by men (algorithmic bias).

- The result? An even stronger bias against women than either problem would cause alone.

Detecting and Measuring Bias

Bias detection requires different strategies depending on whether you’re looking for algorithmic bias or data bias.

Detecting Algorithmic Bias

- Test with balanced datasets – Feed the algorithm data that is perfectly representative and see if bias still appears.

- Fairness metrics – Compare false positive and false negative rates across groups.

- Transparency in logic – Review the mathematical rules, feature weights, and decision thresholds.
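The fairness-metrics check above can be sketched in a few lines of Python. This is a minimal illustration, not a full audit: the function name `error_rates_by_group` and the toy labels are assumptions made for the example, and it covers only binary labels (1 = positive, 0 = negative).

```python
def error_rates_by_group(y_true, y_pred, groups):
    """Return false positive and false negative rates for each group."""
    stats = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats.setdefault(group, {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
        if truth == 1:
            s["pos"] += 1
            s["fn"] += int(pred == 0)  # missed a true positive
        else:
            s["neg"] += 1
            s["fp"] += int(pred == 1)  # flagged a true negative
    return {
        group: {
            # None means the rate is undefined (no such cases in the group).
            "fpr": s["fp"] / s["neg"] if s["neg"] else None,
            "fnr": s["fn"] / s["pos"] if s["pos"] else None,
        }
        for group, s in stats.items()
    }


# Toy data: same base rate in both groups, different kinds of mistakes.
y_true = [0, 0, 1, 1, 0, 0, 1, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = error_rates_by_group(y_true, y_pred, groups)
for group, r in rates.items():
    print(group, r)
```

A large difference in either rate between groups is a signal to investigate further. It does not by itself say whether the cause is the algorithm or the data, which is why the balanced-dataset test is a useful companion.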

Detecting Data Bias

- Data audits – Check the demographic breakdown and representation in the dataset.

- Sampling analysis – Ensure that all subgroups are proportionally represented.

- Historical review – Look for patterns in the data that reflect past discrimination.
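A basic data audit can start with something as simple as the following Python sketch. It compares each group's share of the dataset with a reference share for the population the system is meant to serve. The function name `representation_gaps`, the group labels, and the 50/50 reference split are all hypothetical; choosing the right reference population is a judgment call that code cannot make for you.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Dataset share minus reference share, for each reference group.

    Negative values mean the group is underrepresented in the dataset.
    """
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical dataset: 80 records from men, 20 from women.
dataset_groups = ["men"] * 80 + ["women"] * 20
# Hypothetical reference: the population served is split evenly.
reference = {"men": 0.5, "women": 0.5}

gaps = representation_gaps(dataset_groups, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

Groups that appear in the reference but not in the dataset show up with a gap equal to minus their full reference share, which makes complete omissions easy to spot.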

How to Fix Algorithmic Bias and Data Bias in AI

Bias in AI can come from two main sources — the algorithm’s design or the data it learns from.

Fixing algorithmic bias means improving the decision-making rules of the model. You can redesign the objective function to include fairness goals, adjust feature weights so they don’t overvalue sensitive factors, or use fairness-aware techniques like adversarial debiasing. The goal is to make sure the algorithm’s logic doesn’t inherently favor or harm certain groups.

On the other hand, fixing data bias is about improving the quality and balance of your dataset. This could involve collecting more representative samples, balancing the dataset by oversampling or undersampling, or anonymizing sensitive details that might influence unfair outcomes. In some cases, data augmentation can help fill gaps for underrepresented groups.

The big difference is where you focus the fix — the “recipe” (algorithm) or the “ingredients” (data). In real-world projects, both types often exist together, so the smartest approach is to audit both the dataset and the algorithm before launch. That way, you can catch hidden unfairness early and ensure your AI is not just accurate, but also fair and ethical.

Let’s summarize!

The way you fix bias depends entirely on whether it’s algorithmic or data-driven.

Fixing Algorithmic Bias

- Redesign the objective function to include fairness constraints.

- Adjust feature weights to avoid overemphasis on sensitive attributes.

- Introduce algorithmic debiasing techniques like adversarial debiasing or fairness-aware regularization.
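To make the idea of a fairness-aware objective more concrete, here is a minimal Python sketch. It adds a penalty for the gap in average predicted score between groups (a demographic-parity style term) to an ordinary log loss. The function names, the weight `lam`, and the toy predictions are assumptions for illustration. In practice the penalized loss would be minimized during training rather than evaluated on fixed predictions as it is here, and demographic parity is only one of several possible fairness criteria.

```python
import math


def log_loss(y_true, probs):
    """Average binary cross-entropy."""
    eps = 1e-12  # guard against log(0)
    total = 0.0
    for y, p in zip(y_true, probs):
        total += y * math.log(max(p, eps)) + (1 - y) * math.log(max(1 - p, eps))
    return -total / len(y_true)


def parity_gap(probs, groups):
    """Largest difference in mean predicted score between any two groups."""
    by_group = {}
    for p, g in zip(probs, groups):
        by_group.setdefault(g, []).append(p)
    means = [sum(v) / len(v) for v in by_group.values()]
    return max(means) - min(means)


def regularized_loss(y_true, probs, groups, lam):
    """Accuracy term plus a fairness penalty weighted by lam."""
    return log_loss(y_true, probs) + lam * parity_gap(probs, groups)


y_true = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
# Two hypothetical models: the first is more confident, the second less so.
model_1 = [0.9, 0.9, 0.9, 0.1, 0.9, 0.1, 0.1, 0.1]
model_2 = [0.8, 0.8, 0.8, 0.2, 0.8, 0.2, 0.2, 0.2]

for lam in (0.0, 2.0):
    l1 = regularized_loss(y_true, model_1, groups, lam)
    l2 = regularized_loss(y_true, model_2, groups, lam)
    print(f"lam={lam}: model_1={l1:.3f}, model_2={l2:.3f}")
```

With `lam` set to zero the objective prefers the first model purely on accuracy. With a large enough `lam` the preference flips to the model with the smaller gap between groups, which shows how the choice of objective, not the data, steers the outcome.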

Fixing Data Bias

- Collect more representative data.

- Balance the dataset through oversampling or undersampling.

- Remove or anonymize sensitive variables, and check for proxy features (such as postal codes or résumé keywords) that can still reveal them.

- Use data augmentation to fill in underrepresented groups.
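The balancing step can be sketched with plain random oversampling in Python. This is a minimal illustration under stated assumptions: the function name `oversample_to_balance` and the toy rows are hypothetical, and the approach simply duplicates existing rows from smaller groups until every group matches the largest one.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(rows, group_key, seed=0):
    """Duplicate rows from smaller groups until all groups are equal in size."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)

    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to fill the shortfall (none for the largest).
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Hypothetical training rows: 6 from one group, 2 from another.
rows = (
    [{"group": "men", "id": i} for i in range(6)]
    + [{"group": "women", "id": i} for i in range(6, 8)]
)

balanced = oversample_to_balance(rows, "group")
print(Counter(row["group"] for row in balanced))
```

Oversampling adds no new information, since the extra rows are copies, so it is no substitute for collecting more representative data. It is also usually applied to the training split only, so that evaluation still reflects the real distribution.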

Why People Confuse Algorithmic Bias with Data Bias

People often confuse algorithmic bias with data bias because both produce the same visible symptom: unfair outcomes. Data bias comes from flawed or incomplete training data. Algorithmic bias comes from the system’s design or rules, which can create unfairness on their own or amplify flaws already in the data. Since the algorithm is the part that produces the decision, people tend to assume the bias lives only in the algorithm.

In real-world AI systems, bias often has both components at the same time. When results look discriminatory, it’s tempting to blame the algorithm — but in many cases, the algorithm is just faithfully learning the patterns in the data.

Think of it like a cooking analogy:

- Data bias is like bad ingredients — no matter how good the recipe, the meal will taste bad.

- Algorithmic bias is like a flawed recipe — even the best ingredients will come out wrong.

The Ethical and Legal Stakes

Understanding this difference isn’t just technical — it has ethical and legal implications.

Laws like the EU’s AI Act and various U.S. state and local regulations require developers of certain AI systems, particularly high-risk ones, to identify and mitigate bias. But if you don’t know whether your bias is algorithmic or data-driven, compliance becomes guesswork.

Conclusion — A Practical Checklist

Algorithmic bias comes from the way an AI is built, while data bias comes from the information it learns from. Both can cause unfair results.

To create fair AI, we need to check the design and the data. Fixing both sides helps build systems that are accurate, ethical, and trustworthy.

Before deploying an AI system, ask:

- Is my data representative and fair? (Avoid data bias.)

- Does my algorithm’s design promote fairness? (Avoid algorithmic bias.)

- Have I tested for bias on multiple levels?

- If bias exists, do I know where it’s coming from?

By separating these two types of bias, we can make AI systems that are not only smart but also just.